2010 update: Lo, the Web Performance Advent Calendar hath moved

Dec 6: This is the sixth in a series of performance articles that make up my 2009 performance advent calendar experiment. Stay tuned for the next articles.

Last night I talked about the benefits of reducing the number of page components and the resulting elimination of HTTP overhead. I got a little carried away making the case (because, surprisingly, I still meet people who are not convinced of the benefits) and didn't talk about some of the drawbacks and pain points associated with reducing the number of components. Kyle Simpson posted a comment reminding me that it's not all roses (also see his blog post).

On drawbacks

A lot of the activities related to performance optimization have drawbacks associated with them. That's the nature of the game. Rarely is an improvement a clear win. Usually you need to sacrifice something - be it development time, maintainability, browser support, standards compliance (reluctantly), accessibility (never!). It's up to the team to decide where to draw the line. My personal favorite optimizations are those (image optimization, for example) that are taken care of automatically, by a build process, offline (as opposed to at runtime), at the stage right before deployment. But this also has drawbacks - you need to set up the build process to begin with, and it has to be a build process that doesn't introduce errors, doesn't require more testing, is easy and friendly to use, doesn't take forever and so on.

So, drawbacks.

Here's how Jerome K. Jerome jokes about trade-offs in his Three Men in a Boat:

"...everything has its drawbacks, as the man said when his mother-in-law died, and they came down upon him for the funeral expenses."

It's never easy, is it?

Combining creates atomic components

Say you merge three JavaScript files - 1.js, 2.js and 3.js - to create all.js. A few days later you change 2.js. This invalidates all the cached versions of all.js that existing users have in their browser cache. You need to create a new bundle, say all20091206.js. Then a few more days pass and you fix a bug in 3.js. Again, you need a new bundle. Then the library (1.js) comes out with a new version and you upgrade - yet another bundle.
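The renaming can be automated so nobody has to remember to do it. Here's a minimal sketch, assuming you name the bundle after a hash of its contents instead of the date (a swapped-in variation on the all20091206.js idea above). The function name `bundle_name` and the 8-character digest length are made up for illustration:

```python
import hashlib


def bundle_name(sources, prefix="all", ext="js"):
    """Return (file name, merged content) for a list of source strings.

    The name embeds a short hash of the merged content, so it changes
    only when one of the sources changes.
    """
    merged = "\n".join(sources)
    digest = hashlib.sha256(merged.encode("utf-8")).hexdigest()[:8]
    return f"{prefix}.{digest}.{ext}", merged


if __name__ == "__main__":
    # Stand-ins for the contents of 1.js, 2.js and 3.js
    name, _ = bundle_name(["var a = 1;", "var b = 2;", "var c = 3;"])
    print(name)
```

Rebuilding without changing any source gives the same name, so users keep their cached copy. Any edit to any of the three files gives a new name, which is exactly the invalidation problem described here.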

While first-time visitors benefit from the fewer requests, repeat visitors don't take much advantage of the cache with such frequent updates. Another optimization rule says to set the expiration date far in the future, but what's the point if you're going to change the component every other day?

One way to deal with this is organizational - set up a release schedule. You don't push that new feature today when you know that the library update is coming up tomorrow. Queue the changes and roll them out in batches (could be pre-defined intervals). This is important in larger organizations where more than one team may touch parts of the bundled components and push out the whole uber-component.

Another way is to identify which files change relatively often and put the moving parts in a separate bundle. This way you end up with one core, slow-moving component and one that changes quickly. When you change the quick one, you at least don't invalidate the core.

But in order to make such a call you need to measure and analyze, and answer two questions:

- How many repeat visits do I have? Forums and online communities are more sticky, for example. Other sites may be surprised how few visitors come with a full cache.

- Which files go in the changing bundle? Surely not all files change at the same rate. The rate of change also changes, e.g. a new feature matures and has fewer bugs, and therefore requires fewer updates. When do I move a piece of new code into the slow-to-change core?
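The second question can be answered mechanically once you have some numbers. Below is a rough sketch, assuming you already know how many times each file changed over some period (for example from version control). The function name `split_bundles` and the threshold are hypothetical, and picking the threshold is still your call:

```python
def split_bundles(change_counts, max_core_changes=1):
    """Partition files into a slow-moving core and a fast-changing bundle.

    change_counts maps a file name to how many times it changed in the
    period you measured (hypothetical input, e.g. from version control).
    """
    core, volatile = [], []
    for name in sorted(change_counts):
        if change_counts[name] <= max_core_changes:
            core.append(name)
        else:
            volatile.append(name)
    return core, volatile


if __name__ == "__main__":
    counts = {"1.js": 0, "2.js": 7, "3.js": 1}
    core, volatile = split_bundles(counts)
    print("core:", core)
    print("volatile:", volatile)
```

Re-running this every release or so also answers "when do I move new code into the core?": a file moves over once its change count drops under the threshold.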

Facebook has gone pretty scientific in this regard. David Wei and Changhao Jiang presented their system for monitoring the usage of the site and adaptively bundling features together. They show (slide 26) that they have a total of 6 megs of JS and 2 megs of CSS. Merging all of that into one atomic component is unthinkable, so more intelligent methods are a must.

So a third way is to follow common user paths across the pages visited in a session and bundle together the files that users are likely to need given their pattern of site navigation.
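This is not how Facebook's system works (their approach is in the slides), just a toy sketch of the idea. It assumes you can log which files each session ended up needing, then bundles the files that show up in at least half of the sessions. The name `likely_files` and the 50% cutoff are made up:

```python
from collections import Counter


def likely_files(sessions, min_share=0.5):
    """Return files requested in at least min_share of the sessions, sorted."""
    if not sessions:
        return []
    counts = Counter()
    for files in sessions:
        counts.update(set(files))  # count each file once per session
    cutoff = min_share * len(sessions)
    return sorted(name for name, n in counts.items() if n >= cutoff)


if __name__ == "__main__":
    # Hypothetical log: one list of requested files per session
    log = [
        ["core.js", "photos.js"],
        ["core.js", "chat.js"],
        ["core.js", "photos.js"],
        ["settings.js"],
    ]
    print(likely_files(log))
```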

Yet another way to combine stuff is to have pre- and postload bundles. One has the bare minimum to get the user interacting with the page, the other has more of the bells and whistles.

In summary, the atomic components create challenges of their own. That shouldn't be used as an excuse though. As you see, there are ways.

Atomic bundles and personal projects

I doubt that the options above are really ever going to work for a personal project or a blog though. We're lazy people and since the site ain't broken... why fix it?

A personal project usually gets us excited initially and then the interest fades away for an extended period of time. If you don't change something now while you're into the project, then changes happen less often as time passes. (You also forget how the code works and are reluctant to touch/break it.)

A good strategy to optimize a personal project is to come back to it a week or two after a major update. Things are still fresh in your head, but if you haven't changed anything in two weeks, you probably won't touch it for another year. So 'tis the time for a one-off little project - turn on gzipping, minify CSS and JS (which you were changing much too often a week ago), combine components.

Post-launch one-off optimization sprints don't work in bigger organizations and projects, but they can be a "cost"-effective way to optimize a small, personal site.

Merging components is an extra step

Scenario 1: why merge now when in the p.m. I'll work on that thing again?

Scenario 2: I have to move to another task in the p.m. and I barely have the time to fix this bug before lunch, let alone to combine components and stuff.

Merging components is boring, there's nothing particularly challenging to it. How hard could it be?

$ cat 1.js 2.js 3.js > all.js

And it's an extra step.

Any extra work you introduce, any extra step, has a great chance of being skipped, and then gradually forgotten.

That's why there are two options for the optimizations:

- Make them easy to do. Otherwise no one's going to do them.

- Make them harder not to do.

What I mean is, for example, take a few minutes to create a script for this new project or feature. The script minifies, combines and pushes to the server, all with one command. And especially if you do it several times a day, you can keep one console open and just hit ARROW UP + ENTER to repeat the command after you're done with a change. Two keystrokes sure beat opening up an FTP program, logging in, navigating your HDD, navigating the server... tens of clicks easily.
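Such a script doesn't have to be fancy. Here's a bare-bones sketch of the combining step only. The minify and push-to-server steps depend on which tools and hosting you use, so they are left out, and the script name `build.py` is just an assumption:

```python
import sys
from pathlib import Path


def combine(sources, target):
    """Concatenate the source files, in order, into target; return byte count."""
    parts = [Path(src).read_text(encoding="utf-8") for src in sources]
    # A newline between files guards against a file that lacks a final one.
    merged = "\n".join(parts)
    Path(target).write_text(merged, encoding="utf-8")
    return len(merged.encode("utf-8"))


# Usage (hypothetical script name): python build.py all.js 1.js 2.js 3.js
if __name__ == "__main__" and len(sys.argv) >= 3:
    size = combine(sys.argv[2:], sys.argv[1])
    print(f"wrote {sys.argv[1]} ({size} bytes)")
```

Once the command is in your shell history, the ARROW UP + ENTER routine takes over and the extra step stops feeling like one.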

To summarize: it's best not to underestimate laziness. We shouldn't ignore the fact that we're lazy, but embrace it. Make the right thing easy and the wrong thing hard.

Run-time merging

It's best to keep the static components static. Static is simple, static is fast. But sometimes in the interest of merging, minification and so on in a very dynamic (or technically lacking) environment, you may resort to dynamic file bundling. Sometimes also the little pieces can be merged in so many combinations that dynamic components make sense.

Take for example the combo handler script used by YUI. You have a combo script at:

http://yui.yahooapis.com/combo

which simply takes a list of files to merge, finds them on the disk and spits them out with the correct Expires header.

For example here's a URL produced by the dependency configurator:

http://yui.yahooapis.com/combo?3.0.0/build/yui/yui-min.js&3.0.0/build/oop/oop-min.js&3.0.0/build/event-custom/event-custom-min.js&3.0.0/build/attribute/attribute-base-min.js&3.0.0/build/base/base-base-min.js

The little pieces that make up the YUI library can be combined in so many ways that pre-merging all possible bundles is not an option.
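I don't know how the actual YUI combo handler is written, so take this as a guess at the first thing any such script has to do: turn the query string into a list of files, and refuse anything that tries to escape the directory the files live in. The function name `combo_files` is hypothetical:

```python
from urllib.parse import urlsplit


def combo_files(url):
    """Return the relative file paths requested by a combo-style URL.

    Rejects absolute paths and '..' segments so a request cannot
    reach outside the directory the files are served from.
    """
    query = urlsplit(url).query
    files = [part for part in query.split("&") if part]
    for path in files:
        if path.startswith("/") or ".." in path.split("/"):
            raise ValueError(f"unsafe path: {path}")
    return files


if __name__ == "__main__":
    url = (
        "http://yui.yahooapis.com/combo"
        "?3.0.0/build/yui/yui-min.js&3.0.0/build/oop/oop-min.js"
    )
    for name in combo_files(url):
        print(name)
```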

As part of YUI3, a PHP loader was recently released which you can use to host your own combo solution - it doesn't have to have anything to do with YUI. Or you can roll your own combo script: how hard could it be to write a script that merges a number of files? Check Ed Eliot's script for inspiration/borrowing.

SmartOptimizer is another open source project that you can use for comboing, minification and so on.

If you do create your own combo script, don't forget to store the merged results in a disk cache directory, so you don't have to re-merge the same bundles over and over.
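A sketch of what that caching could look like, under one big assumption: a file's content never changes once published under a given path (which holds if the version is part of the path, as in 3.0.0/build/...). The cache key is a hash of the requested file list, and `cached_bundle` and its parameters are names I made up:

```python
import hashlib
from pathlib import Path


def cached_bundle(files, root, cache_dir):
    """Return the merged content of files (relative to root), using a disk cache."""
    # The same list of files in the same order always maps to the same key.
    key = hashlib.sha256("&".join(files).encode("utf-8")).hexdigest()
    cache_file = Path(cache_dir) / key
    if cache_file.exists():
        return cache_file.read_text(encoding="utf-8")
    merged = "\n".join(
        (Path(root) / name).read_text(encoding="utf-8") for name in files
    )
    Path(cache_dir).mkdir(parents=True, exist_ok=True)
    cache_file.write_text(merged, encoding="utf-8")
    return merged
```

Note that nothing here ever expires a cached bundle. If your paths are not versioned, you'd need to include something like file modification times in the key, or clear the cache directory on deploy.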

CSS sprites are a pain

While combining text source files like CSS and JS is fairly trivial, creating sprites is a pain. Not only do you have to create the image, but then you have to get the coordinates and write the CSS rules. Then when an element in the sprite changes, it may affect the others and you may have to move them around, then update the CSS. Luckily there are tools.
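To make the bookkeeping concrete, here's a small sketch of the coordinates part. It assumes the images are stacked top to bottom, left-aligned, with optional padding between them. It only computes the layout and the CSS rules, it doesn't draw the sprite image itself, and `sprite_css` and its parameters are illustrative names:

```python
def sprite_css(images, sprite_url="sprite.png", padding=0):
    """Stack images top to bottom and return (css_text, sprite_width, sprite_height).

    images is a list of (class_name, width, height) tuples in pixels.
    """
    rules = []
    y = 0
    max_width = 0
    for name, width, height in images:
        # The element at offset y is shown by shifting the background up by y.
        pos = f"0 -{y}px" if y else "0 0"
        rules.append(
            f".{name} {{ background: url({sprite_url}) no-repeat {pos}; "
            f"width: {width}px; height: {height}px; }}"
        )
        max_width = max(max_width, width)
        y += height + padding
    total_height = y - padding if images else 0
    return "\n".join(rules), max_width, total_height


if __name__ == "__main__":
    icons = [("home", 16, 16), ("mail", 16, 12), ("rss", 14, 14)]
    css, width, height = sprite_css(icons, padding=2)
    print(css)
    print(f"sprite size: {width}x{height}")
```

Change the height of the first icon and every offset below it shifts, which is the "move them around, then update the CSS" chore in a nutshell.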

Here are some tools and resources that should help with the sprites. CSS sprites are an excellent way to reduce the number of HTTP requests, especially when it comes to tiny icons, those that are sometimes smaller than the HTTP request/response headers needed to download them. It's a technique that is still underused.

- Intro to CSS sprites by Chris Coyier, includes a how-to for the SpriteMe tool

- SpriteMe - bookmarklet tool by Steve Souders

- Cool runnings - the sprite generation service behind spriteme.org

- SpriteGen - sprite generation tool

- SmartSprites is an interesting project - a downloadable tool that looks for specific instructions you leave in your normal CSS file

- Spritr - another online tool

And now, re-introducing CSSSprites.com 🙂

CSSSprites.com

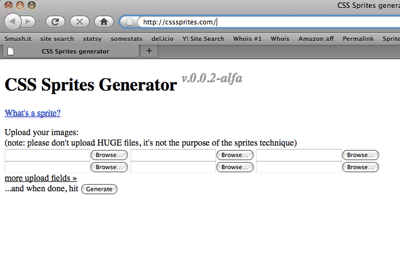

CSSSprites.com is a fun weekend project I did two and a half years ago. Back then I was even more convinced that this technique was wildly underused and still considered unstable by many people, although it was fearlessly in use by prominent sites such as yahoo.com. There was also a bit of a lack of understanding of how to build sprites and also, just like today, it was just a pain to create them.

So I wanted to raise awareness of the technique by creating an online tool for sprite generation, probably the first such tool (which explains why I was able to get such a domain name). The idea was just to let people know that this technique exists, that it's not rocket science and that you can start quickly by using the tool. The tool was actually quite ugly and I posted a comment on css-tricks.com asking if anyone wanted to contribute a skin.

CSSSprites.com: BEFORE

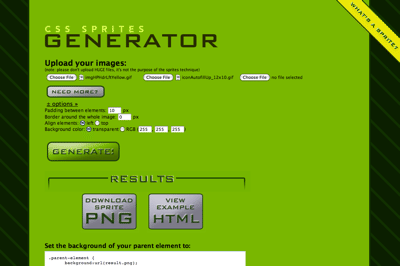

CSSSprites.com: AFTER

Luckily, Chris Coyier who runs css-tricks.com responded and sent me a skin. It was exactly 2 years minus 2 days ago.

(I remember January 2nd 2008, right after New Year's, I was writing a list of things I wanted to accomplish in 2008. It was a list of unfinished projects actually. I decided I was sick of not finishing projects so 2008 would be the year of completion. I wasn't going to start anything new until I finished all abandoned projects. Needless to say, like all New Year's resolutions, it didn't work. I actually started something pretty cool and at the same time never finished csssprites.com with Chris' skin.)

So today, after two years of procrastination, I sat down and updated the site. An additional kick in the behind was that a few days ago the exceptionally reliable host site5.com, in their care for the well-being of the sites on the shared hosting, shut down all my sites, including this blog, because csssprites.com was consuming too many resources. I had to take it down. Today it's proudly back up, hosted by dreamhost, the all-hero host that survived the smush.it explosion.

What's new in the tool?

- nicer skin!

- the old "skin" is still available

- some options - padding between sprite elements, background color, border size, choice of top vs. left alignment of the elements

- no more gifs are being generated

- the generated PNG is optimized with pngout and pngcrush

- upgrade to YUI3

So give it a spin and comment with any problems/wishes and so on. I haven't posted the code yet, it's not in a presentable state, plus the "core" of it, the generation of the sprite image, is already out there.

That's all folks

When I talked about optimizing personal projects above, I had in mind sites like csssprites.com. Currently it's not optimized at all - it's a sprites tool that doesn't use sprites. But that's because I only worked one evening on it and it's probably broken here and there. I intend to revisit it in a few weeks when it stabilizes.

So, parting words - combining components into atomic downloads is not without drawbacks, just like most other performance optimizations. But this doesn't mean we shouldn't do it. Carefully analyzing users' behavior is a way to optimize the process of optimizing, but meanwhile just go with your gut feeling and look at the metrics to see if you were right. Rinse, repeat. When in doubt - err on the side of fewer HTTP requests.